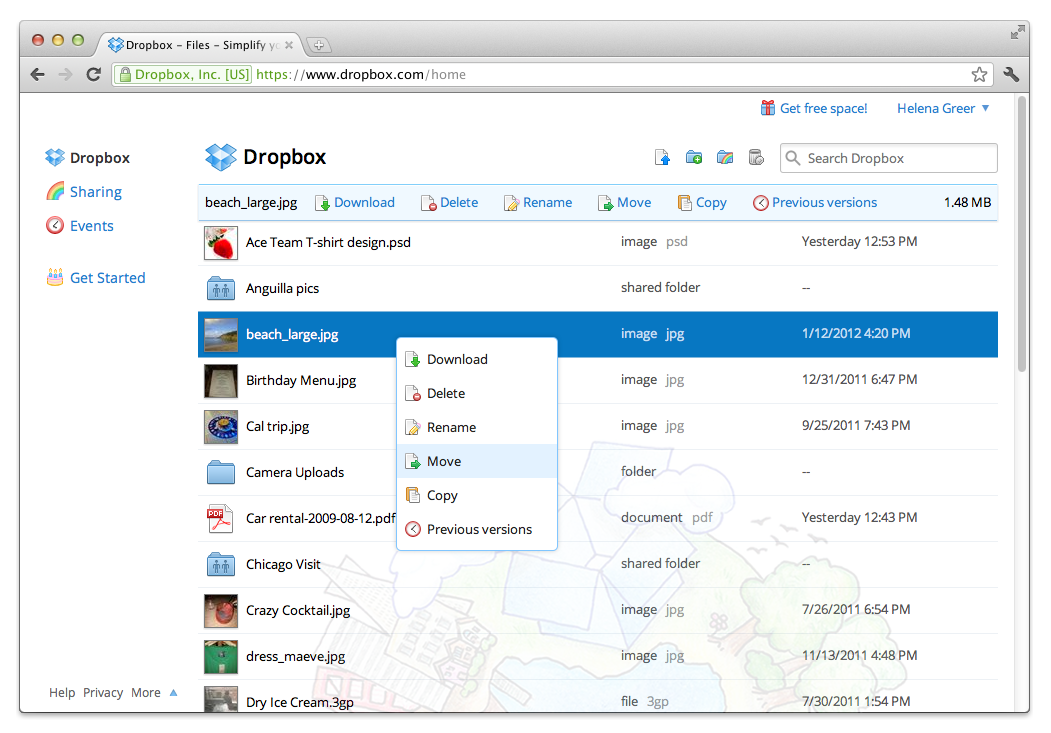

The top line represents average response time on the site (we had one of these graphs for web traffic, and one for client traffic). Each segment represents a partition of work. So you can see there was a spike in response time at around 1:00, which was caused by something in the MySQL commit phase. Our actual graph had a bunch more segments, so imagine how much screen real estate this saves when you’re trying to figure shit out. “CPU” is cheating: it’s actually just the average response time minus everything else we factored out. If there is a way to do this, it would also be cool to have markings on the graph that point to events you can annotate, such as a code push or an AWS outage.

If you haven’t used the shell much, it can be eye-opening how much faster certain tasks are. Let’s say you’re trying to debug something in your webserver, and you want to know if maybe there’s been a spike of activity recently, and all you have are the logs. Having graphing for that webserver would be great, but if it’s on 1- or 5-minute intervals like most systems, it might not be fine-grained enough (or maybe you only want to look at a certain kind of request, or whatever).
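For the logs-only debugging scenario in this post, here is a rough sketch of how you might count requests per second to spot a spike. It is written in Python purely for illustration; the same counting could be done with a shell pipeline. The timestamp format (Common Log Format), the sample log lines, and the names `requests_per_second` and `must_contain` are all assumptions, not anything from the original setup.

```python
import re
from collections import Counter

# Assumption: timestamps look like [10/Oct/2011:13:55:36 -0700] (Common Log Format).
TIMESTAMP = re.compile(r"\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2})")


def requests_per_second(lines, must_contain=None):
    """Count log lines per one-second timestamp.

    If must_contain is given, only lines containing that substring are
    counted (for example, a single kind of request).
    """
    counts = Counter()
    for line in lines:
        if must_contain is not None and must_contain not in line:
            continue
        match = TIMESTAMP.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


# Made-up sample lines, only to show the shape of the input.
sample_log = [
    '127.0.0.1 - - [10/Oct/2011:13:55:36 -0700] "GET /index.html HTTP/1.1" 200 2326',
    '127.0.0.1 - - [10/Oct/2011:13:55:36 -0700] "GET /api/items HTTP/1.1" 200 512',
    '127.0.0.1 - - [10/Oct/2011:13:55:36 -0700] "GET /api/items HTTP/1.1" 500 0',
    '127.0.0.1 - - [10/Oct/2011:13:55:37 -0700] "GET /index.html HTTP/1.1" 200 2326',
]

all_counts = requests_per_second(sample_log)
api_counts = requests_per_second(sample_log, must_contain="/api/")

# The busiest seconds first: a spike shows up at the top of this list.
for second, count in all_counts.most_common(5):
    print(second, count)
```

On a real log you would pass an open file object instead of `sample_log`, since the function only needs an iterable of lines.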

Another thing that became increasingly useful while scaling was to have thousands of custom stats, aggregated over thousands of servers, graphed. Most out-of-the-box monitoring solutions aren’t meant to handle this sort of load, and we wanted a one-line way of adding stats so that we didn’t have to think about whether it cost anything, or fuss around with configuration files just to add a stat (decreasing the friction of testing and monitoring is a big priority). We chose to implement a solution in a combination of memcached, cron, and ganglia. Every time something happened that we wanted graphed, we would store it in a thread-local memory buffer. Every second we’d post that stat to a time-keyed bucket (timestamp mod something) in memcached, and on a central machine, every minute, the buckets in memcached would get cleared out, aggregated, and posted to ganglia. This was very scalable, and it made it possible for us to track thousands of stats in near real time. Even stats as fine-grained as “average time to read a memcached key”, which happened dozens of times per request, performed fine.

Of course, when you have thousands of stats it becomes tough to just “look at the graphs” to find anomalies. The summary graph at the top of this post is the one we found the most useful.
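The buffer, bucket, and aggregate steps of the stats pipeline described in this post could look roughly like the sketch below. It is not the original implementation: `FakeMemcached` is an in-memory stand-in for a real memcached client, `NUM_BUCKETS` is a made-up value for the "timestamp mod something", every function name is hypothetical, and averaging is just one assumed way to aggregate. Posting the result to ganglia is left out.

```python
import threading
from collections import defaultdict

NUM_BUCKETS = 120  # Hypothetical modulus for the time-keyed buckets.


class FakeMemcached:
    """In-memory stand-in for memcached (assumption, not a real client)."""

    def __init__(self):
        self._data = {}
        self._lock = threading.Lock()

    def append(self, key, samples):
        with self._lock:
            self._data.setdefault(key, []).extend(samples)

    def pop(self, key):
        # Return the bucket's samples and clear the bucket.
        with self._lock:
            return self._data.pop(key, [])


_local = threading.local()


def record(name, value):
    """The one-line call for adding a stat: buffer it in thread-local memory."""
    if not hasattr(_local, "buffer"):
        _local.buffer = []
    _local.buffer.append((name, value))


def flush(cache, now):
    """Meant to run about once a second: post buffered samples to a time-keyed bucket."""
    samples = getattr(_local, "buffer", [])
    _local.buffer = []
    if samples:
        cache.append(f"stats:{int(now) % NUM_BUCKETS}", samples)


def aggregate(cache, start, end):
    """Meant to run once a minute on a central machine.

    Drains the buckets for the seconds in [start, end) and returns the
    average value per stat name.
    """
    totals = defaultdict(lambda: [0.0, 0])
    for second in range(int(start), int(end)):
        for name, value in cache.pop(f"stats:{second % NUM_BUCKETS}"):
            totals[name][0] += value
            totals[name][1] += 1
    return {name: total / count for name, (total, count) in totals.items()}


# Usage with made-up numbers: three samples recorded across two seconds.
cache = FakeMemcached()
record("memcached.read_ms", 2.0)
record("memcached.read_ms", 4.0)
flush(cache, now=1000)
record("memcached.read_ms", 6.0)
flush(cache, now=1001)
averages = aggregate(cache, start=960, end=1020)
print(averages)
```

The point of the thread-local buffer is that `record` never touches the network, so adding a stat stays cheap; only the once-a-second `flush` talks to the cache. A real deployment would need an append or increment operation that is atomic across many servers, which the lock in `FakeMemcached` only imitates within one process.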
